![BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/df2b0e26d0599ce3e70df8a9da02e51594e0e992/3-Figure1-1.png)

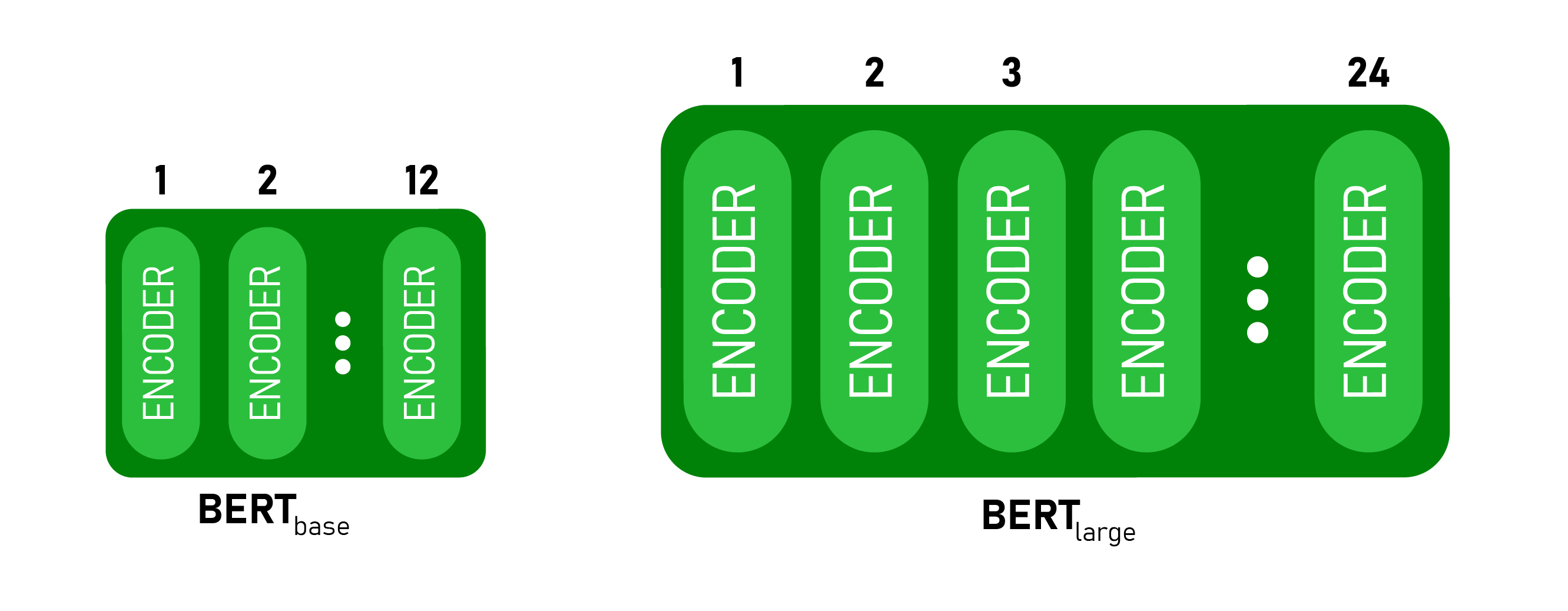

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding | Semantic Scholar

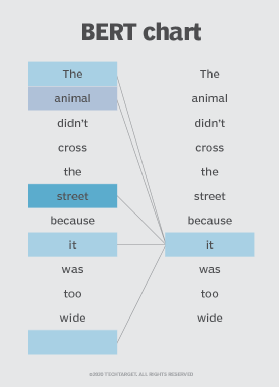

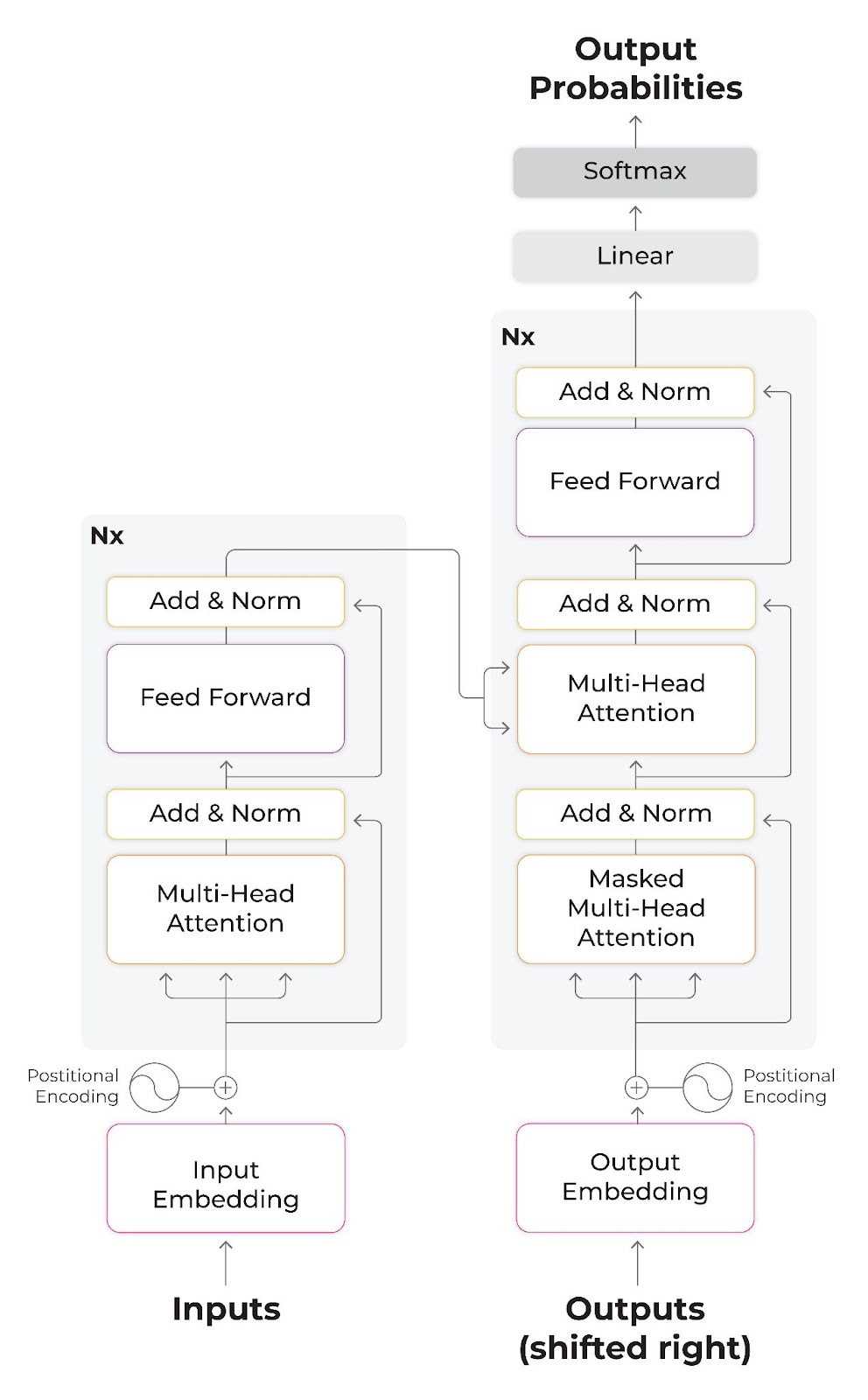

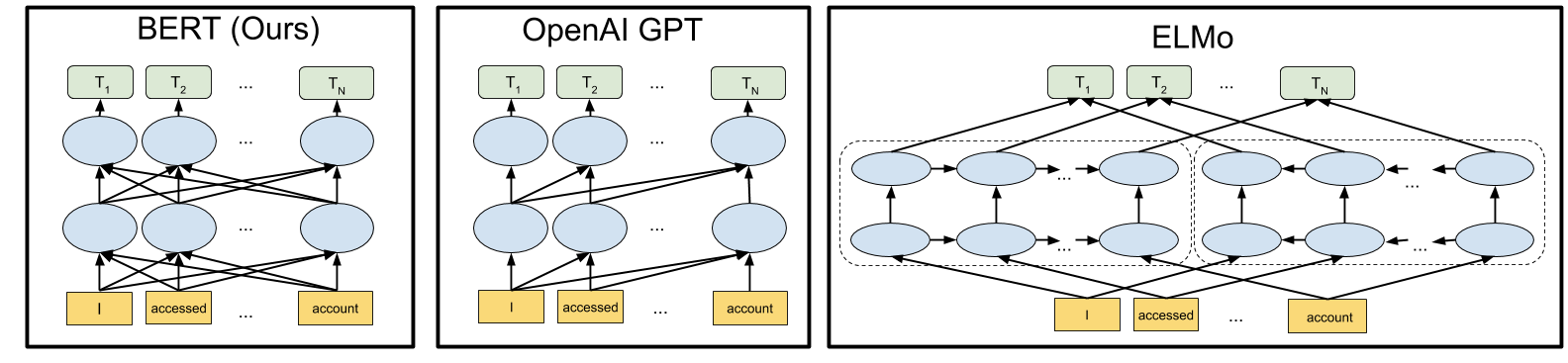

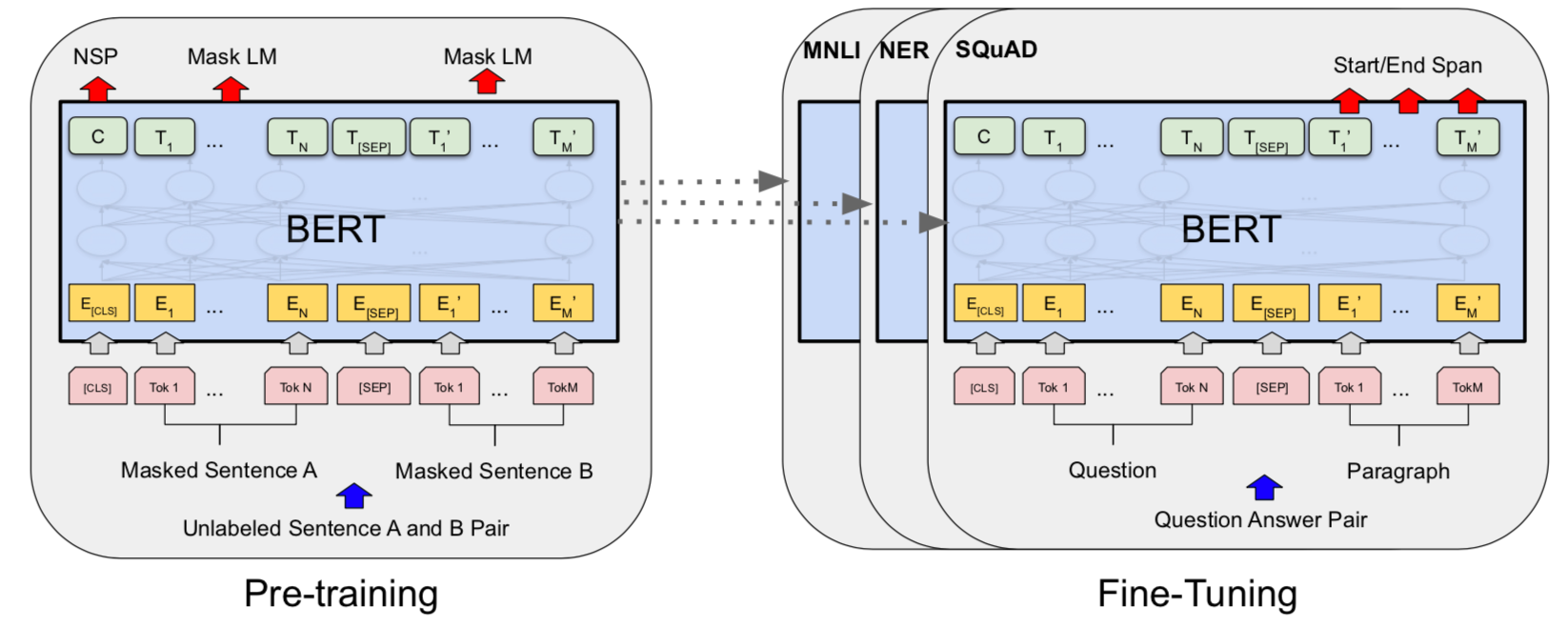

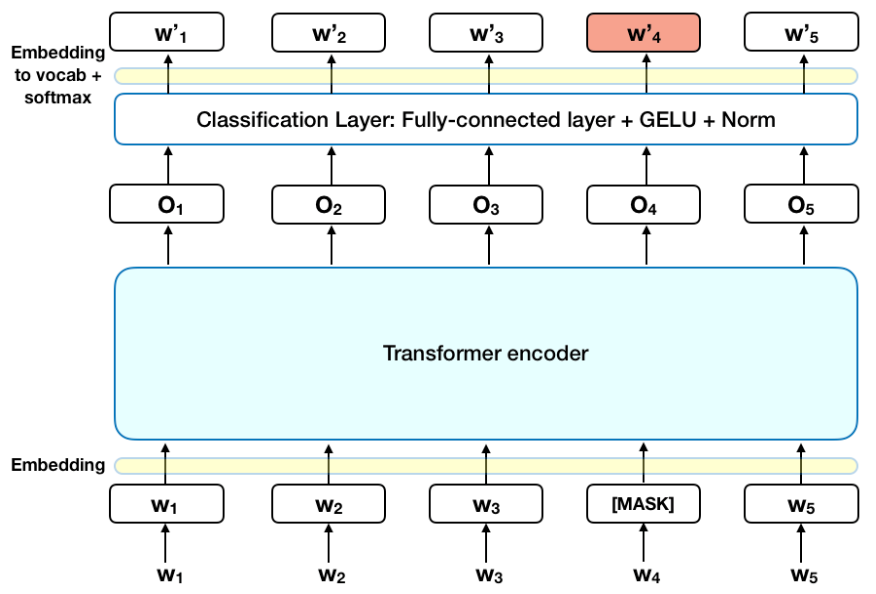

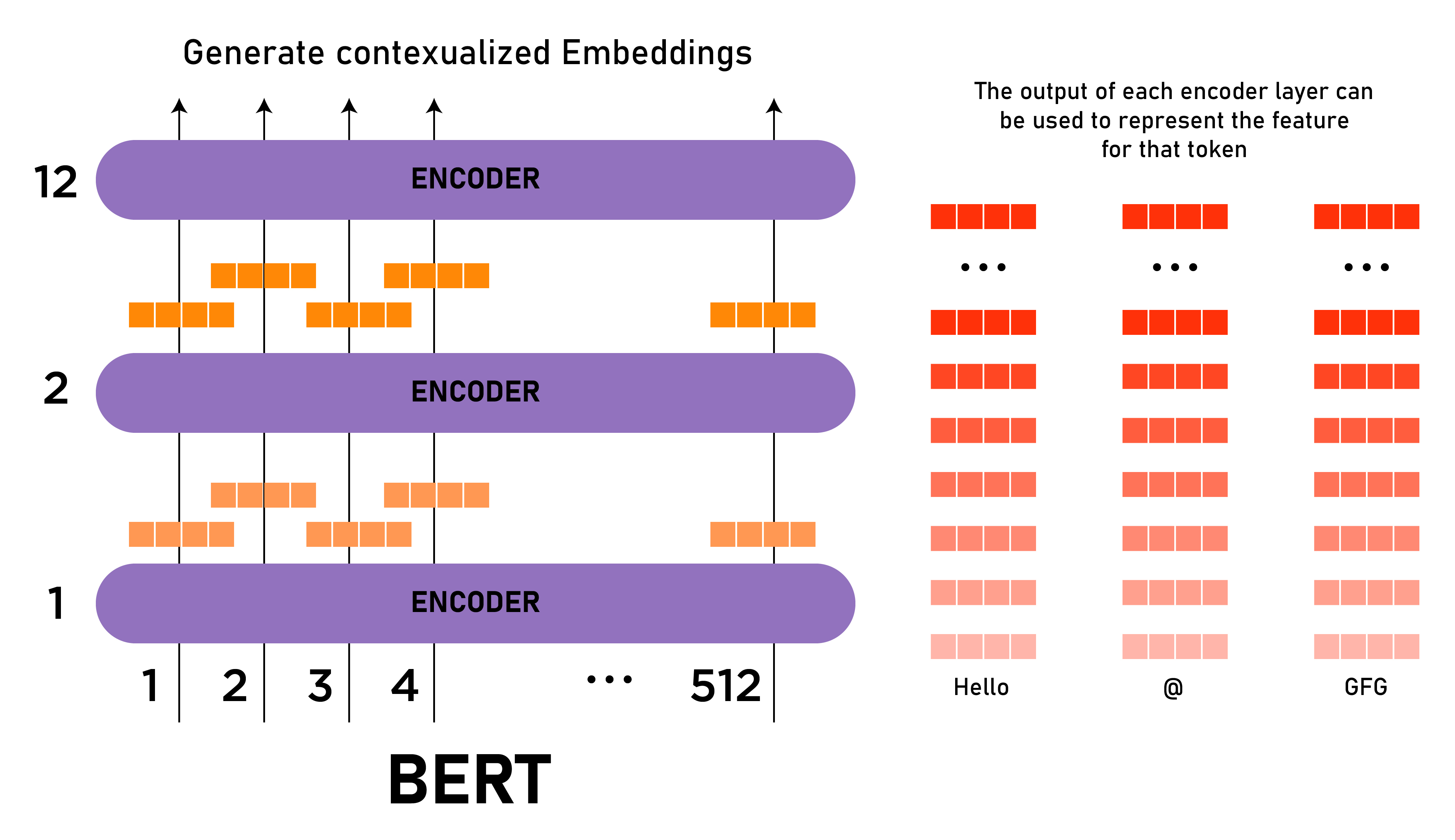

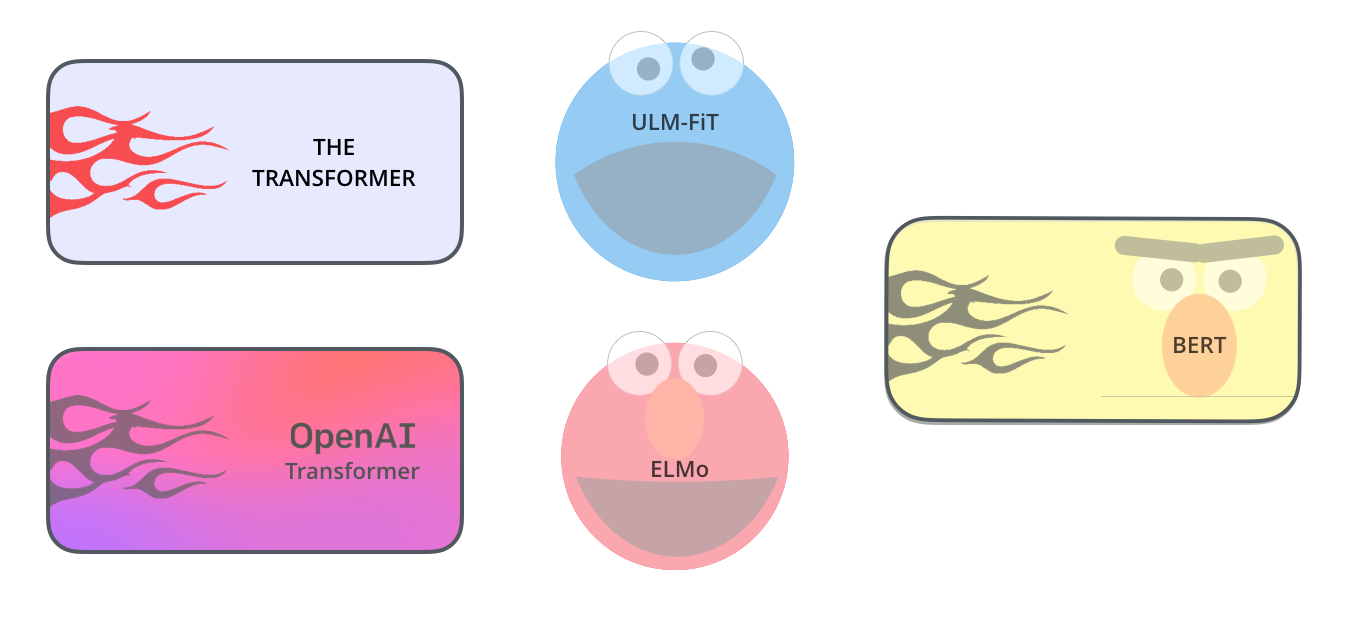

The Illustrated BERT, ELMo, and co. (How NLP Cracked Transfer Learning) – Jay Alammar – Visualizing machine learning one concept at a time.

Paper summary — BERT: Bidirectional Transformers for Language Understanding | by Sanna Persson | Analytics Vidhya | Medium

![AlBERTo: Italian BERT Language Understanding Model for NLP Challenging Tasks Based on Tweets | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/e1e43d6bdb1419e08af833cf4899a460f70da26c/3-Figure1-1.png)

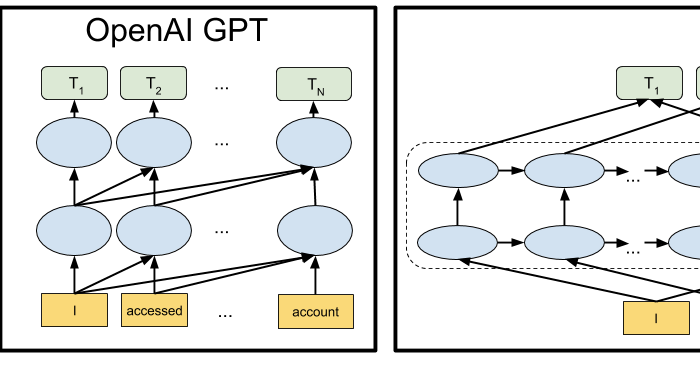

AlBERTo: Italian BERT Language Understanding Model for NLP Challenging Tasks Based on Tweets | Semantic Scholar

Language Understanding with BERT. The most useful deep learning model | by Cameron R. Wolfe | Towards Data Science